Python is an object-oriented, high-level, general-purpose programming language. It is used to build web applications and software, automate tasks, and work with data effectively. Because it is flexible, versatile, and easy for beginners to pick up, it is one of the most widely used programming languages.

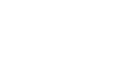

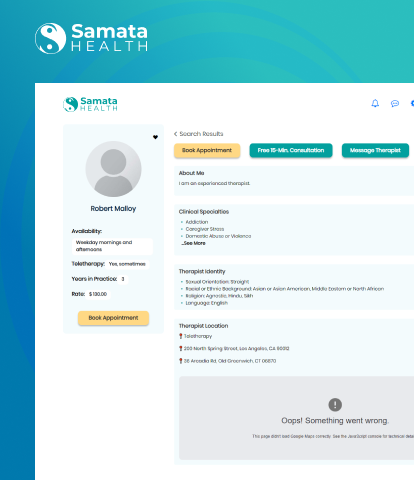

Outsourcing to a Python development company lets you build high-performing, customised web products tailored to your market's needs and demands. Our custom software development services cater to the unique needs of various industries by delivering tailored solutions. To keep pace with a fast-evolving tech market, you need to keep your digital products up to date, and we, as your Python development partner, can help you achieve that.

Outsource your Python web development requirements to eSparkBiz, a leading Python Development Company in India, the USA, and Canada. Our skilled Python developers have experience building high-performing, low-latency applications with Python 3.11.2 and frameworks such as Django, Flask, and Web2py. The growing importance of the Python programming language in the digital landscape makes it essential to choose the right solution provider. We offer flexible hiring options that let you hire according to your project needs and the duration of the work.