Quick Summary: The first five search results on Google get most of the traffic, which is why search engine optimization matters for your website. Developers and business owners now apply SEO techniques right from the initial stage of development. Here is a comprehensive guide to using React with search engine optimization in mind and tackling the problems it raises.

React websites face a big challenge when it comes to search engine optimization. Most React applications rely on client-side rendering, while the Google bot works best with HTML that has already been rendered on the server. That mismatch makes SEO for React a real challenge.

Today, we will talk about the SEO problems of React. We will look at the challenges that stop React from being SEO-friendly and, finally, get familiar with some of the best practices for making your application rank higher in Google.

Common Indexing Issues With JavaScript Pages

Following are some of the most common issues with JavaScript pages that can affect ranking as well as indexing.

Slow and complex indexing process

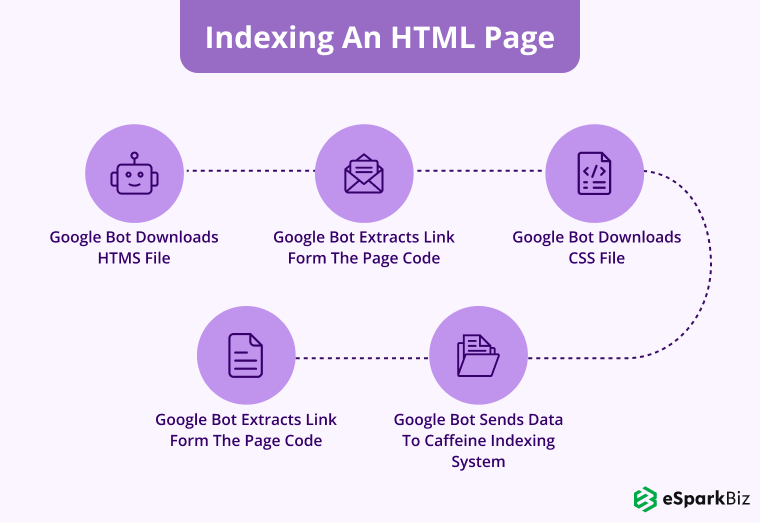

As we have already mentioned above, Google bots can crawl and understand the HTML pages. The following image will show you how it functions.

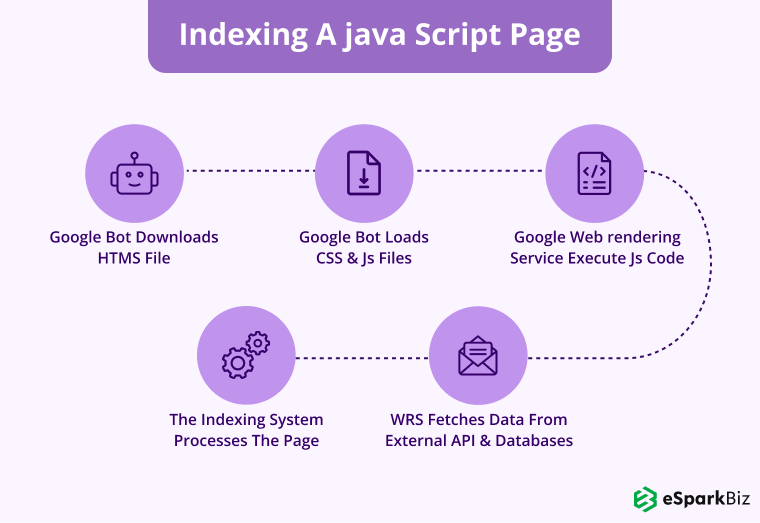

Google does all these activities within a second. However, when we talk about the JavaScript code, the process of indexing is very complicated. Have a look at the diagram given below:

Once all these steps are done, only then the Google bot will be able to find the new links and add them in a queue of crawling. This process is slower as compared to indexing the HTML page.

Errors in JavaScript code

HTML and JavaScript handle errors very differently. A small mistake in JavaScript code can break indexing.

The main reason is that the JavaScript parser does not tolerate errors. If the parser encounters a character or token where it does not expect one, it immediately stops parsing the script and throws a SyntaxError.

Hence a single typo or stray character can make the whole script inoperable. If this happens while the Google bot is indexing the page, the bot will see an empty page and will index it as a page without any content.
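To illustrate how unforgiving the parser is, here is a minimal sketch in plain JavaScript. The helper name `parsesCleanly` and both sample scripts are made up for this example; `new Function` is used only to ask the engine to compile a string without running it.

```javascript
// Returns true when the engine can parse the source, false on a SyntaxError.
// `new Function` only compiles the code; it does not execute it.
function parsesCleanly(source) {
  try {
    new Function(source);
    return true;
  } catch (error) {
    if (error instanceof SyntaxError) return false;
    throw error;
  }
}

const validScript = 'const title = "Home"; document.title = title;';
// One missing closing quote is enough to invalidate the entire script.
const brokenScript = 'const title = "Home; document.title = title;';

console.log(parsesCleanly(validScript));  // true
console.log(parsesCleanly(brokenScript)); // false
```

Note that the broken script fails as a whole: none of its statements run, which is why a crawler can end up with no content at all.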

Exhausted crawling budget

Crawl budget is the maximum number of pages that search engine bots can crawl on a site in a given period of time. For a single script, the limit is often said to be around 5 seconds.

Many websites built with JavaScript have experienced indexing issues because Google has to wait more than 5 seconds for their scripts to load, parse, and execute.

When scripts are this slow, the Google bot uses up its crawl budget for the website and leaves without indexing it.

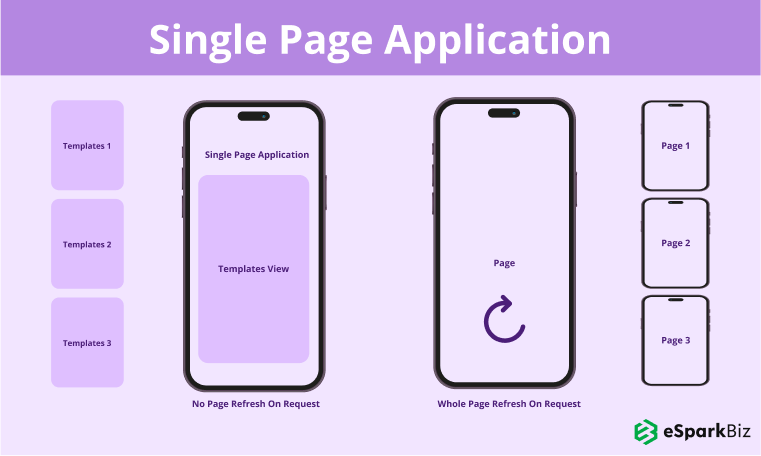

Challenges of indexing SPAs

React is commonly used to build Single Page Applications (SPAs), and that is a real challenge for SEO in React.

Such a web application has only a single page, which is loaded once. Other information is loaded as it is required.

These apps are fast compared to traditional multi-page apps and provide a smooth user experience.

But despite all these benefits, Single Page Applications have some limitations when it comes to search engine optimization.

These web applications can provide content only after the page has been loaded.

If the Google bot crawls the page before the content has loaded, the bot will see an empty page. In such a situation, the website will struggle to rank in SERPs.

Why is React Different?

You may face some problems while doing search engine optimization for a vanilla React app.

Some of the common problems are:

- The entire application has only a single URL.

- Meta tags are not set properly for each view.

- JS takes too much time to load.

To understand why these issues come up, we should first look at how React actually works.

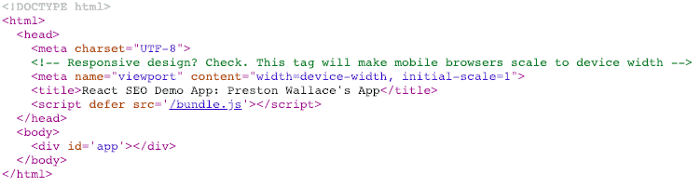

To develop a Single Page App, we build an app that creates its own “virtual” DOM and mounts it into a single tag of one static HTML file.

From that point on, the app runs inside that one page. Of course, there may be various other URLs, such as callback URLs and API endpoints used by other apps or OAuth.

But the site that we have created will always be loaded as a single page with multiple views, known as components in React.

The Good Of React

What makes React different from other options is that it lets developers dynamically control the app or page that is being used. This is also the reason why businesses so frequently hire ReactJS developers these days.

The views of the application or website can change instantly because components can be reused at any time, so no page refresh, and none of its overhead, is needed.

The Bad Of React

A vanilla React app faces some technical SEO issues. They are:

One URL to rule

The main problem an SPA poses for SEO is that, without any modification, it has only one URL. Since there is only one HTML file, there is only one URL. This is an important aspect of React for SEO.

All the metadata is placed between the <head> tags, while the React components are rendered in the body section, which makes it hard to change the meta tags from one view to another.

No Provision For Meta tags

If you want your app to appear at the top of Google’s listings, you need to add a genuine page title and description for each and every page.

Otherwise, all the pages will appear with the same listing in the search engine. React alone does not help in this regard, because it offers no built-in way to change these tags.
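One way to picture the fix is a lookup from route to metadata that is applied whenever the view changes. The sketch below is plain JavaScript; the routes, the "Acme Store" texts, and the `renderHeadTags` helper are all hypothetical and only illustrate the idea. In a real React app a library such as React Helmet typically plays this role.

```javascript
// Hypothetical route table: every view gets its own title and description.
const routeMeta = new Map([
  ["/", { title: "Acme Store", description: "Shop the full Acme catalogue." }],
  ["/pricing", { title: "Pricing | Acme Store", description: "Compare Acme plans and prices." }],
]);

// Used when a path has no entry of its own.
const fallbackMeta = { title: "Acme Store", description: "" };

// Escape characters that would break out of an HTML element or attribute.
function escapeHtml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the head tags for a path, falling back to defaults for unknown paths.
function renderHeadTags(path) {
  const meta = routeMeta.get(path) || fallbackMeta;
  return (
    `<title>${escapeHtml(meta.title)}</title>\n` +
    `<meta name="description" content="${escapeHtml(meta.description)}">`
  );
}

console.log(renderHeadTags("/pricing"));
```

With a table like this, each URL produces a distinct title and description, so the pages no longer share one listing.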

JS loads in a different manner

Earlier, Google did not execute JavaScript at all when indexing, but times have changed and Google now runs JavaScript.

But SPAs that are not rendered on the server side still face some problems:

- Some content is not read by Google

- Some search engines do not crawl JS

- SPAs are crawled at a slow speed

Challenges with SEO of React Websites

Use of Single Page Application (SPA)

When the World Wide Web was first launched, websites worked in such a way that the web browser had to send a request for each page.

To put it simply, the server generated the HTML and sent it back in response to each and every request.

For each and every page, the browser had to render everything from scratch. This put additional pressure on the server, as it needed to fully render each page instead of just providing the required information.

Modern technology has reduced loading times. How?

To resolve the loading issue, developers introduced JS-based Single Page Applications, also known as SPAs. These, however, are the source of the SEO problems in React.

They do not reload the entire page; rather, they refresh only the content that changes. This technology has played a crucial role in increasing the performance of websites.

SEO issues with Single Page Applications

As we have seen, Single Page Applications improve the performance of a website, but they still have some major issues when it comes to SEO. They are:

Lack of dynamic SEO tags

A Single Page Application loads information dynamically into particular areas of the web page.

So, when the crawler follows a particular link, it has difficulty completing the page load cycle.

The metadata that is in place for the search engine cannot be refreshed.

Due to this, the crawler cannot render the SPA properly, and the page gets indexed as an empty page. None of this is good for ranking.

Developers can work around these issues by creating separate pages for the Google bots and by working with the webmaster on how to get the remaining content indexed.

However, creating separate pages increases business expenses, and it is still difficult to rank the website at the top of the search results.

Search Engines Crawling JavaScript Is Precarious

All SPAs depend on JavaScript to load page content dynamically. A Google crawler, or any other search engine crawler, may skip executing JavaScript.

In that case the bot picks up only the content that is available without running JavaScript and indexes the page based on that content.

According to a statement shared by Google in 2015, its bots crawl and render the CSS and JS on a website as long as the site allows them access to those files.

The statement sounds positive, but relying on it is risky. The Google crawler is undoubtedly smart enough to execute JavaScript, but one cannot rely completely on a single search engine.

Other search crawlers, such as those of Bing, Yahoo, or Baidu, may read sites built with JavaScript as empty pages.

To solve this problem, you have to render the content on the server so that the bot has something to read. This is why SEO in React is not that easy.

How to Make Your React Website SEO-Friendly?

As discussed, some of these drawbacks can be bypassed. Following are some of the best practices for solving the SEO problems of React.

They will also help you understand how to optimize a React app for SEO.

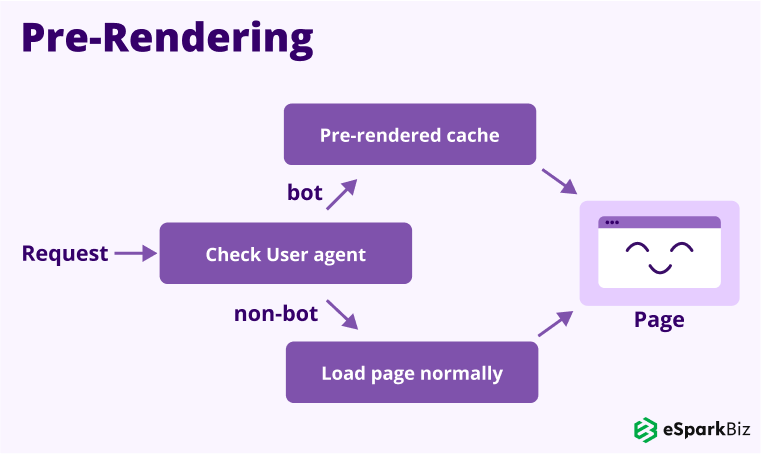

Pre-rendering: Making SPA & Other Websites SEO-Friendly

Pre-rendering is one of the best approaches for making single-page and multi-page web applications SEO-friendly.

This approach is mainly used when search bots or crawlers are not able to render the web pages efficiently. In such apps, one can make use of pre-renderers.

Pre-renderers are special programs that intercept requests to the website. If the request comes from a crawler, the pre-renderer sends a cached static HTML version of your website.

If the request comes from a user, the normal page gets loaded.
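The decision a pre-renderer makes can be sketched in a few lines of plain JavaScript. Everything here is an assumption for illustration: the crawler pattern is a short, non-exhaustive list of user-agent substrings, and `prerenderCache`, `spaShell`, and `chooseResponse` are hypothetical names, not part of any particular pre-rendering product.

```javascript
// Substrings found in the user-agent headers of some well-known crawlers.
// The list is illustrative, not exhaustive.
const CRAWLER_PATTERN = /googlebot|bingbot|baiduspider|yandex|duckduckbot/i;

function isCrawler(userAgent) {
  return CRAWLER_PATTERN.test(userAgent || "");
}

// Hypothetical cache of pre-rendered pages, keyed by URL path.
const prerenderCache = new Map([
  ["/", "<html><body><h1>Home</h1><p>Full static content</p></body></html>"],
]);

// The normal SPA shell: content appears only after bundle.js has run.
const spaShell =
  '<html><body><div id="root"></div><script src="/bundle.js"></script></body></html>';

// Crawlers get the cached static HTML when it exists; everyone else gets the SPA.
function chooseResponse(userAgent, path) {
  if (isCrawler(userAgent) && prerenderCache.has(path)) {
    return prerenderCache.get(path);
  }
  return spaShell;
}

const googlebot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)";
console.log(chooseResponse(googlebot, "/") === spaShell); // false
```

Falling back to the SPA shell when a path is missing from the cache keeps the site working for crawlers even before a page has been pre-rendered.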

Following are the advantages of this approach in making your website SEO friendly:

Advantages Of Pre-Rendering

- Pre-rendering programs can execute various types of modern JavaScript and turn the result into static HTML.

- They support recent web features.

- The pre-rendering approach needs minimal codebase changes or no changes at all.

- It is easy to implement.

But there are some drawbacks too. Have a look at the following points:

Drawbacks Of Pre-Rendering

- This approach is not suitable for pages that show frequently changing data.

- It consumes too much time if the website is very large and has too many pages.

- Pre-rendering services are usually not free.

- The developers have to rebuild the pre-rendered page every time its content changes.

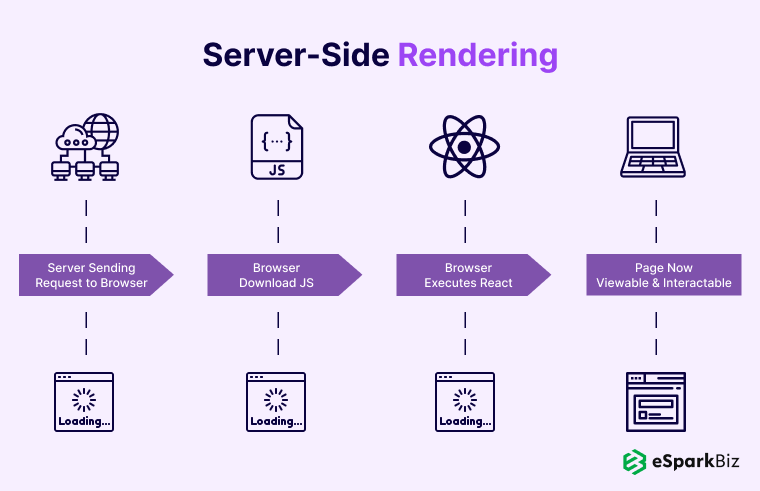

Server-side rendering: Fetching HTML Files With Entire Content

If you want to create a React web app, you have to know the difference between server-side and client-side rendering.

With client-side rendering, the browser, as well as the Google bot, receives an empty HTML file or a file with very little content.

The JavaScript code then downloads the content from the server and renders it so that users can see it on their screens.

But client-side rendering causes problems for SEO because Google crawlers find no content, or too little content, to index the page properly.

With server-side rendering, on the other hand, the Google bots and the browsers receive HTML files that already contain the entire content. This helps Google bots index the page without any problem and, ultimately, rank it higher.

Server-side rendering is one of the easiest ways to create an SEO-friendly React website. But if you want a Single Page Application that renders on the server, you have to add another layer, such as Next.js.
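The difference between the two responses can be shown with a small dependency-free sketch. The `article` data and the functions `renderArticle`, `clientSidePage`, and `serverSidePage` are hypothetical; in a React project the rendering step would normally be handled by `renderToString` from `react-dom/server` or by a framework such as Next.js, and a hand-written function stands in for it here.

```javascript
// Hypothetical data that would normally come from a database or an API.
const article = { title: "React and SEO", body: "Render on the server first." };

// Stand-in for a component render (HTML escaping omitted for brevity).
function renderArticle({ title, body }) {
  return `<article><h1>${title}</h1><p>${body}</p></article>`;
}

// Client-side rendering: the server sends an empty mount point.
function clientSidePage() {
  return '<!doctype html><html><body><div id="root"></div>' +
    '<script src="/bundle.js"></script></body></html>';
}

// Server-side rendering: the same mount point already contains the content.
function serverSidePage(data) {
  return '<!doctype html><html><body><div id="root">' + renderArticle(data) +
    '</div><script src="/bundle.js"></script></body></html>';
}

console.log(clientSidePage().includes(article.title));        // false
console.log(serverSidePage(article).includes(article.title)); // true
```

A crawler that does not execute JavaScript sees only these strings, so only the server-rendered page gives it a heading and text to index.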

Isomorphic React: Detecting The Availability Of JavaScript

An isomorphic JavaScript application can run on the server as well as on the client, so it can cope with JavaScript being either enabled or disabled in the client.

When JavaScript is not enabled, the isomorphic code runs on the server and sends the final content to the client.

This way, all the required content and attributes are readily available when the page starts loading, which is what matters for SEO in React.

When JavaScript is enabled, the page functions as a dynamic app made of many components.

This gives faster loading than traditional websites and a smoother user experience, even in SPAs.
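The core idea, one piece of view code shared by both environments, can be sketched as follows. This is a simplified illustration and not how React itself does it: `renderGreeting`, `isServer`, and `deliver` are hypothetical names, and the browser branch assumes the page contains an element with the id `root`.

```javascript
// Shared view code: a pure function with no browser or server dependencies.
function renderGreeting(name) {
  return `<p>Hello, ${name}!</p>`;
}

// True when there is no browser `window` object, for example under Node.js.
function isServer() {
  return typeof window === "undefined";
}

// The same render function feeds either the HTTP response or the live DOM.
function deliver(name) {
  const html = renderGreeting(name);
  if (isServer()) {
    return { target: "http-response", html };
  }
  // Browser branch: assumes an element with id "root" exists on the page.
  document.getElementById("root").innerHTML = html;
  return { target: "dom", html };
}

console.log(deliver("Ada").html); // <p>Hello, Ada!</p>
```

Because the markup comes from the same function in both places, the HTML a crawler receives matches what a user with JavaScript enabled ends up seeing.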


Tools to Improve SEO of React Websites

React websites need to be specifically optimized for Google and other search engines. The following tools help in creating React websites that are SEO-friendly.

React Router V4: Creating Routes Between Various Pages or Components

React Router is a library for creating routes between the various pages or components of a React app. It helps in building websites with an SEO-friendly URL structure.

It is basically a collection of navigational components that let you declare the routes of your application. Whenever each view of a React app needs its own URL, React Router can help.
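To make the idea of an SEO-friendly URL structure concrete, here is a dependency-free sketch of what a router does with a path. It does not use React Router itself: the route table, the view names, and the helpers `matchPattern` and `resolveRoute` are hypothetical. React Router v4 expresses the same mapping declaratively with `<Route path="...">` components.

```javascript
// Hypothetical route table: each public URL pattern maps to a named view.
const routes = [
  { pattern: "/", view: "Home" },
  { pattern: "/blog", view: "BlogIndex" },
  { pattern: "/blog/:slug", view: "BlogPost" },
];

// Match a path against one pattern; ":name" segments capture a parameter.
function matchPattern(pattern, path) {
  const patternParts = pattern.split("/").filter(Boolean);
  const pathParts = path.split("/").filter(Boolean);
  if (patternParts.length !== pathParts.length) return null;

  const params = {};
  for (let i = 0; i < patternParts.length; i++) {
    if (patternParts[i].startsWith(":")) {
      params[patternParts[i].slice(1)] = pathParts[i];
    } else if (patternParts[i] !== pathParts[i]) {
      return null;
    }
  }
  return params;
}

// Return the first matching view and its parameters, or null when nothing matches.
function resolveRoute(path) {
  for (const route of routes) {
    const params = matchPattern(route.pattern, path);
    if (params) return { view: route.view, params };
  }
  return null;
}

console.log(resolveRoute("/blog/react-seo-guide"));
```

Readable paths such as `/blog/react-seo-guide` give every view a distinct, crawlable address instead of a single URL for the whole app.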

React Helmet: Managing Metadata Is Easy

React Helmet is widely used for managing the metadata of web pages that are rendered through React components.

React Helmet is a library that works on the client as well as on the server side. Its USP is ease of integration: it requires no major changes to your code.

Fetch As Google: Helping Users To Understand Crawling Process

Although Google bots are able to crawl React apps, it’s important to remain cautious and test how web crawlers actually see the website. Fortunately, there is a tool that takes care of that.

Provided by Google, Fetch as Google helps its users test how Google crawls or renders a URL on the site. In the current Search Console, this feature has been replaced by the URL Inspection tool.

The main function of Fetch as Google is simulating a render, as done in Google’s normal crawling and rendering process.

To use it, you need a React web app. Visit Google Search Console and log in with your Google Account to get access to the tools.

After you add the property in Google Search Console, Search Console will ask you to verify that you own it. This tool is very helpful if you’re dealing with SEO in React.

Conclusion

Single Page Applications developed using React have great page load times and make code easier to manage because of component-oriented rendering.

Search engine optimization of SPAs takes some extra effort in configuring the app. But with the right tools and libraries this effort is manageable, and the web community and organizations increasingly prefer SPAs for their excellent load times.

We hope you had a great time reading this article and that it proves to be of great value for any React developer. Thank you!

Frequently Asked Questions

How Does Google Index JavaScript Pages?

There are some steps that Google Bot follows in order to index the JavaScript pages. They are as follows:

- Google Bot downloads HTML files

- It loads CSS & JavaScript files

- Google Web Rendering Service (WRS) parses, compiles, and executes JavaScript code

- WRS fetches data from external APIs and databases

How To Build An SEO-Friendly React Website?

With the help of pre-rendering and server-side rendering, you can easily build an SEO-Friendly React website.

Can A ReactJS Single Page App Be SEO-Friendly?

It is possible to make a Single Page App (SPA) SEO-friendly. To do so, you need to follow best practices such as pre-rendering or server-side rendering.

Is React SEO-Friendly?

React is not SEO-friendly by default. However, you can make a React website SEO-friendly with the help of tools such as React Router and React Helmet.