In today's competitive, fast-paced environment, conventional application development practices are falling out of use. Handling thousands of lines of code can be challenging. The good news is that microservice best practices make it easier to manage complex development processes involving large codebases.

Today, the development of web applications takes place at a much faster pace. Wondering how it is made possible? That is where microservice architecture comes into the picture.

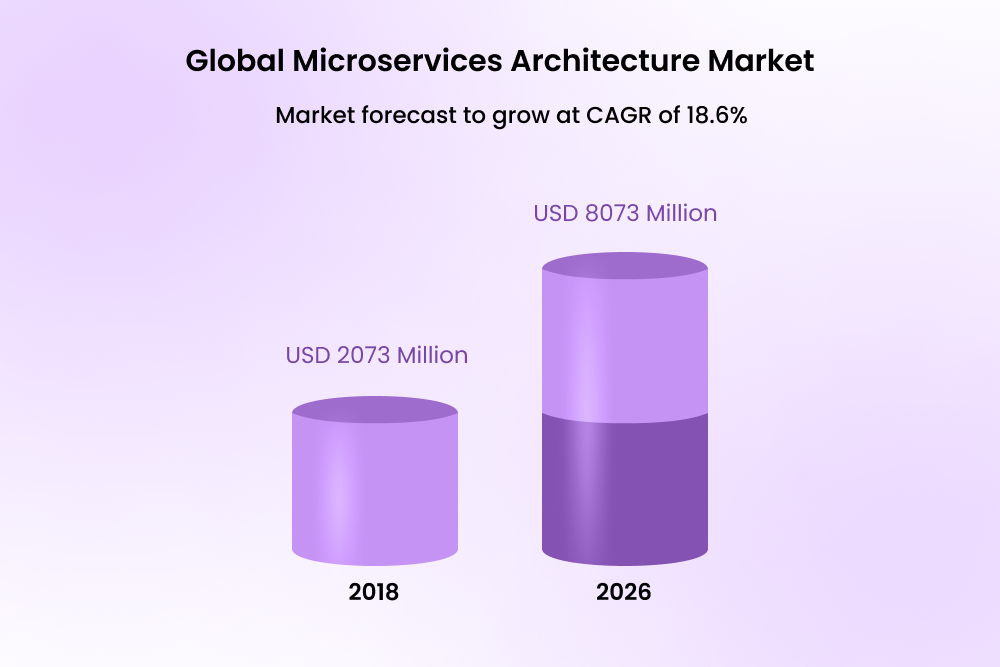

The microservice architecture market is likely to reach USD 8,073 million in 2026, which points to the growing demand for microservices in recent times.

In this article, you will explore the benefits of microservices and the best practices that help you get better outcomes for your apps with less effort.

What is Microservice Architecture?

Microservice architecture refers to a method of structuring applications as a collection of services. It divides the large applications into smaller, independent parts, and each part comes with unique responsibilities. In this architecture, each microservice can accommodate a single feature of an application and handle discrete tasks.

Microservice architecture denotes that small and self-contained services carry out single business operations. Moreover, it facilitates the speedy development of complex and large applications.

In simple words, microservice architecture structures apps as a collection of services that are:

- Independently deployable

- Managed and owned by a small team

- Easily maintainable and testable

- Organized and formed around the abilities of a business.

Benefits of Embracing Microservice Architecture

Still not convinced? Let’s dive into the prominent benefits of using the architecture.

Versatility of the stack

In a microservice architecture, each module serves as a miniature application. As a result, you can build each module using different frameworks, technologies, and programming languages.

It provides the tech teams with the flexibility to choose the best-fitting tech stacks for individual components. Moreover, it helps avoid any compromises to the application due to outdated stacks.

Easy application management

No matter what type or size of app you are building, it is pretty simple and easy to manage if you choose microservice architecture. Wondering how?

Well, the architecture allows better collaboration among the team members. Moreover, you have the flexibility to choose the programming languages of your choice. As a result, the development and management of applications become easier.

Fault isolation

In the traditional monolithic design, the failure of a single component can affect the entire system. However, that is not the case with microservice architecture.

As each of the microservices functions independently, the failure of any microservice will not affect the whole application. Moreover, tools like GitLab can empower you to create fault-tolerant microservices and enhance infrastructure resilience.

Better scalability

When it comes to scaling your application, microservice architecture outperforms monolithic systems. Wondering how? Well, you can scale only the services that are under load, so you no longer struggle to ensure smooth operations during traffic spikes.

In a microservices architecture, dedicated resources are assigned to individual services. As a result, you do not have to worry about widespread disruptions.

Also, there are tools like Kubernetes that allow efficient management of resources. So, you can expect significant cost reductions.

Moreover, microservices simplify the process of updating your application. Therefore, they are an ideal choice for organizations that grow at a rapid pace.

Greater modularity

Microservices offer improved modularity and allow faster modification of applications. They break an application down into smaller components. As a result, you can implement changes with less risk and in much less time.

Moreover, because each service is self-contained, its functionality is easy to understand. So, integrating services into larger applications becomes simple and efficient.

Compatible with Cloud, Kubernetes, and Docker

Docker packages and runs containers, and Kubernetes orchestrates them. Together, they help ensure high availability, robustness, and scalability. Moreover, you can expect seamless load balancing across different hosts. That is why they have become a must-have toolkit for organizations.

Efficient testing

When developing applications, testing is a step that you cannot overlook. Microservice architecture facilitates the testing of individual components and speeds up the overall testing process.

The modular approach of this architecture helps streamline the detection and resolution of potential bugs. As a result, it minimizes the risk of one service affecting the other. Moreover, it allows smoother updates and maintenance of systems.

In addition to that, the microservice architecture also supports concurrent testing. That means multiple team members can work simultaneously on different services.

Let’s take the example of Capital One, a leading financial institution in the United States. They leveraged cloud-native microservice architecture and made use of Docker containers in addition to Amazon Elastic Container Service (ECS). The microservice best practices enabled the firm to decouple apps.

However, Capital One came across several issues. First, multi-port routing for the same server was challenging. Second, context-based routing of services was a problem.

To overcome such difficulties, tools like Consul and Nginx could be a solution. However, they would add to the operating expenses and complexities.

Therefore, the company went ahead with AWS elastic load balancing. It helped the firm with the automatic registration of Docker containers, dynamic port mapping, and path-based routing.

A vital lesson from Capital One is that it is possible to achieve great results with minimal effort. The 80/20 rule, or Pareto Principle, also supports this.

Challenges of Microservice Architecture

No doubt, leveraging the microservices architecture can offer you several benefits. However, there are certain limitations too. Some of the top challenges you are likely to come across include:

- Increase in topological complexity

- Data incompatibilities and translation can be issues.

- It may give rise to network congestion.

- Uncertainties relating to timeframes, data security, and costs

- The cost of building scalable apps may be higher.

Microservice Best Practices You Must Know

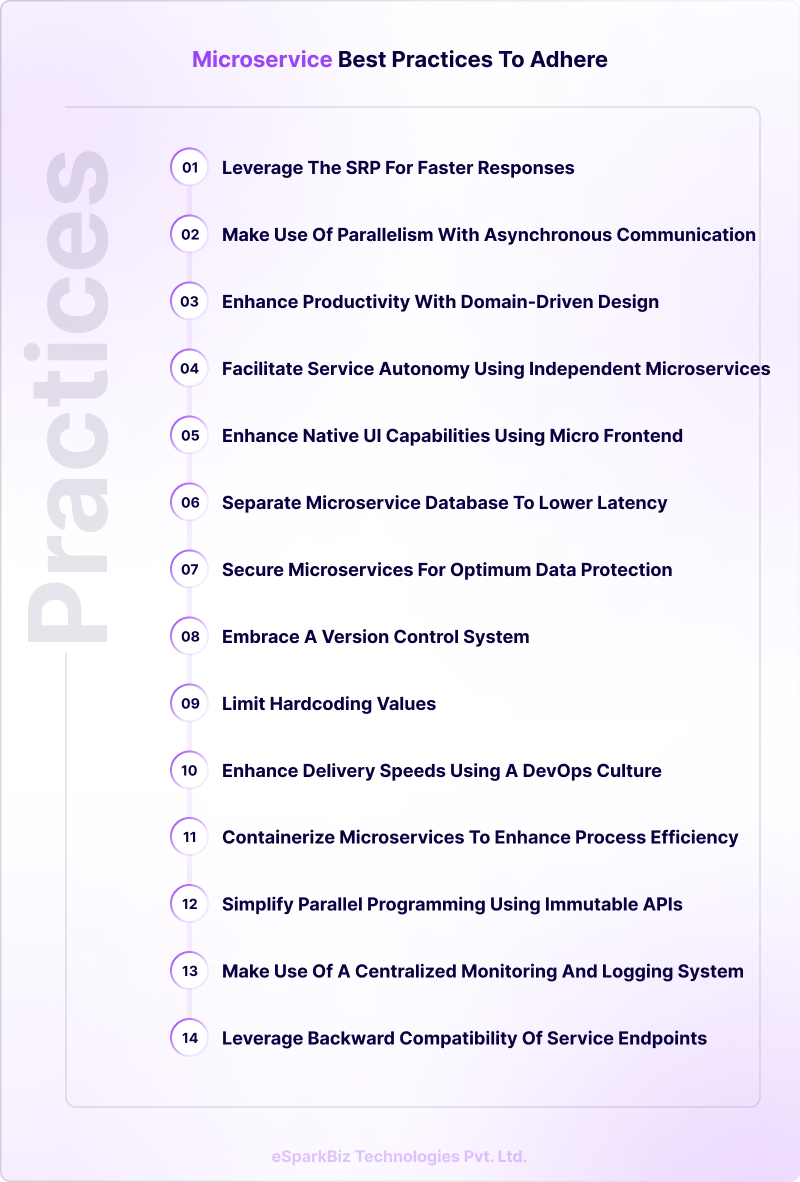

Leverage the single responsibility principle (SRP) for faster responses

Wondering what SRP is? In simple terms, it is a design principle in which each class, module, or service focuses on doing only one thing. Each function or service contains only the business logic associated with its own task.

The use of SRP can lower dependencies significantly. As each function performs only one specific task, it carries little extra overhead.

It brings down the waiting time for individual services and lowers the response time. As a result, it can respond to different user requests faster.
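
To make the principle concrete, here is a minimal Python sketch. The class and function names are hypothetical and chosen only for illustration; they are not taken from any real system. Each class does exactly one job, and a thin coordinator composes them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    user_id: str
    name: str


class ProfileService:
    """Only responsibility: look up profiles."""

    def __init__(self, profiles: dict[str, Profile]) -> None:
        self._profiles = profiles

    def get(self, user_id: str) -> Profile:
        return self._profiles[user_id]


class AuthService:
    """Only responsibility: decide whether a token is valid."""

    def __init__(self, valid_tokens: set[str]) -> None:
        self._valid_tokens = valid_tokens

    def is_authorized(self, token: str) -> bool:
        return token in self._valid_tokens


class ChannelService:
    """Only responsibility: build a channel identifier for two parties."""

    def create(self, customer: Profile, driver: Profile) -> str:
        return f"channel:{customer.user_id}:{driver.user_id}"


def open_chat(token: str, customer_id: str, driver_id: str,
              auth: AuthService, profiles: ProfileService,
              channels: ChannelService) -> str:
    """Thin coordinator: it only wires the single-purpose services together."""
    if not auth.is_authorized(token):
        raise PermissionError("invalid token")
    return channels.create(profiles.get(customer_id), profiles.get(driver_id))
```

Because each class has one reason to change, you can replace or scale any of them without touching the others.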

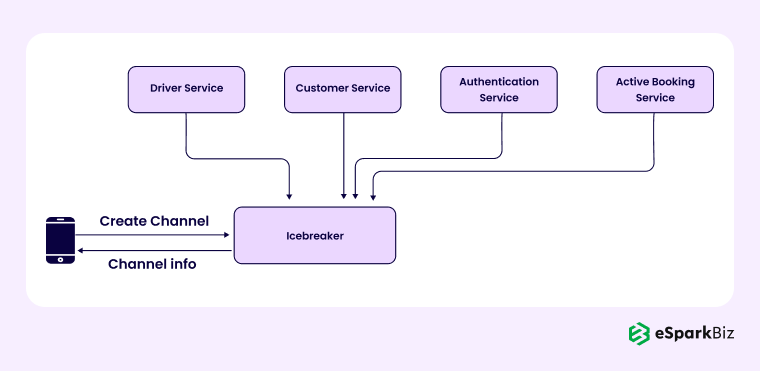

Example: Gojek achieves a lower response time and high reliability with SRP.

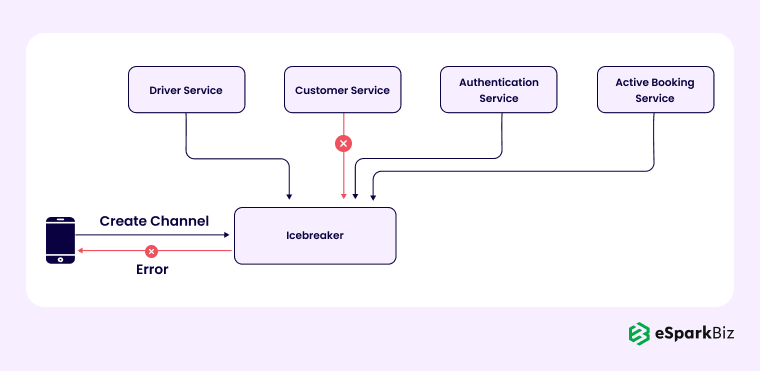

Gojek is a popular marketplace connecting riders with motorcycle drivers. The chat feature of the platform enables users to interact with the drivers through the “Icebreaker” application.

However, Icebreaker relied heavily on other services to create a communication channel, which was an issue for Gojek. To create a channel, Icebreaker had to perform several tasks:

- Fetching the profile of customers

- Authorizing API calls

- Developing a communication channel

- Fetching the details of the driver

- Verifying whether the profile of the driver and customer matches.

The main issue with such high dependency is that the failure of one service would cause the whole chat function to fail.

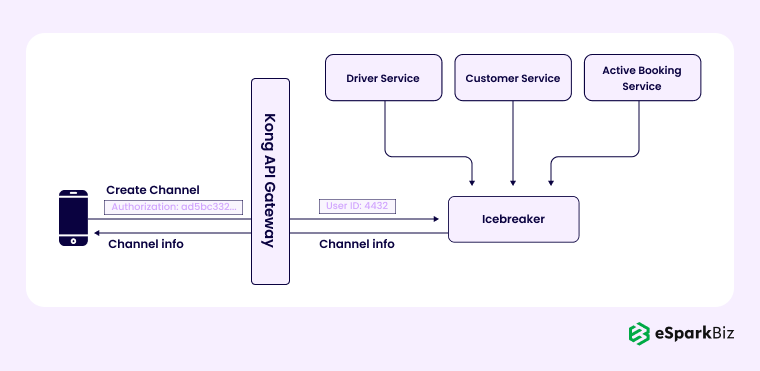

Therefore, to address this problem, Gojek decided to leverage the single responsibility principle. It added a service for each function, assigned each service its own task, and lowered the load on any single service.

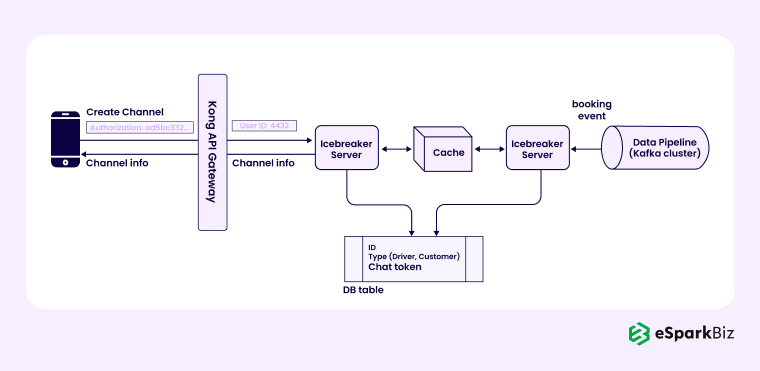

- Profile retrieval – Icebreaker needed chat tokens derived from the user’s and driver’s data, which sat in separate databases. Gojek stored these tokens in Icebreaker’s own data store. As a result, channel creation no longer required any profile retrieval.

- API call function – To authenticate API calls, Gojek relied on a Kong API gateway.

- Active booking – It was essential for Icebreaker to check whether an active booking was created when the user clicked on the channel creation API. To lower dependency, the Gojek team made use of the worker-server approach.

So, whenever there is an order, the worker will develop a communication channel and store it in the Redis cache. The server will push it forward as per the requirement.
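
The worker-server split described above can be sketched roughly as follows. This is an illustration under stated assumptions, not Gojek's implementation: a plain in-memory dictionary stands in for the Redis cache, and every name here is hypothetical.

```python
from typing import Optional


class ChannelCache:
    """In-memory stand-in for the Redis cache (an assumption for this sketch)."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}

    def set(self, key: str, value: str) -> None:
        self._store[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._store.get(key)


def worker_on_order(cache: ChannelCache, order_id: str,
                    customer_id: str, driver_id: str) -> None:
    """Runs when an order is placed: builds the channel ahead of time."""
    cache.set(f"order:{order_id}", f"channel:{customer_id}:{driver_id}")


def server_get_channel(cache: ChannelCache, order_id: str) -> Optional[str]:
    """Runs on a user request: only reads the channel prepared by the worker."""
    return cache.get(f"order:{order_id}")
```

The server never calls the profile or booking services on the request path, which is where the lower dependency comes from.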

The use of the single responsibility principle enabled the firm to lower its response time by about 95%. As a result, satisfying the customers became simple and easy.

Have a dedicated infrastructure

When you have a poor design of the microservice’s hosting platform, you are not likely to get the desired outcomes. Therefore, separating the microservice infrastructure from all other components is essential. It can help in enhancing the overall performance and ensuring fault isolation.

Moreover, you must even isolate the infrastructure of the different components that your microservice relies on. To understand this better, let’s use an example.

Suppose you want to build an application for ordering pizza. Based on the functionality, there will be several components, like the order service, inventory service, user profile service, payment service, delivery notification service, and more.

Let’s assume that the inventory microservice makes use of an inventory database. So, it is not just essential to have a dedicated host machine for the inventory service but also for the inventory database.

Embrace a version control system

If you are willing to develop cloud-native software, microservice version management is a must. You will have to manage thousands of objects that form part of the software supply chain.

Are you wondering what version control means in microservices? It is an approach that enables team members to maintain several versions of a service that offers the same functionality.

Version control includes keeping track of changes in the attributes and containers of the applications. Some of the prominent attributes may involve licenses, software bills of materials, key-value pairs, and more.

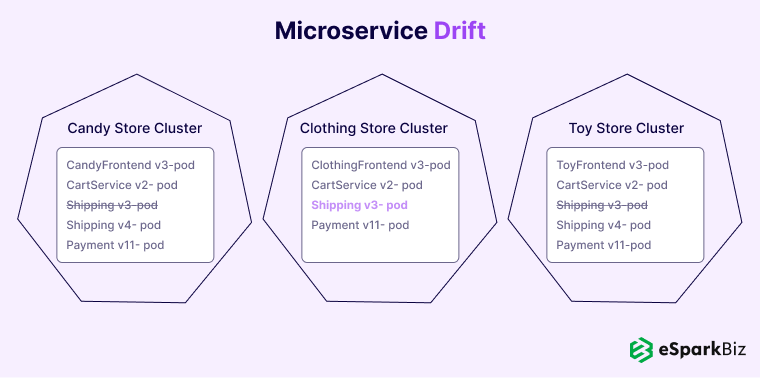

Versioning to Lower Microservice Drift

During the implementation of microservices, you are likely to experience a phenomenon known as “Drift”. It usually occurs when several versions of a single microservice run on several namespaces and clusters.

Still confused? To understand this better, let’s take the case of an online store selling clothing, toys, and candies in different clusters. However, all three leverage a common microservice for shipping.

You may come across drift when, for example, the clothing store runs a different version of the shipping microservice than the other stores. A simple way to avoid the drift is to leverage the same version across all the stores. However, it might not be helpful in environments that aren’t ready for deployment.

Therefore, to ensure effective management of different versions across environments, you will have to rely on microservice versioning. To implement a microservice versioning system, you can choose among different approaches. These include header versioning, URI versioning, calendar versioning, and semantic versioning.

Header Versioning

This approach uses an HTTP header attribute to relay version information. It leverages the content-version attribute to specify the version of the service.

The main advantage of header versioning is its naming conventions. It allows maintaining the location and names of app resources across all updates. The approach makes sure that the URI is not cluttered and that the API names retain their semantic meaning.
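
As a rough sketch of header versioning, the dispatcher below picks a handler based on a `Content-Version` header while the URI stays the same. The header name follows the attribute mentioned above; the handlers, payload shapes, and default version are assumptions made for illustration.

```python
from typing import Callable, Mapping


def shipping_v1(order_id: str) -> dict:
    return {"order": order_id, "eta_days": 5}


def shipping_v2(order_id: str) -> dict:
    return {"order": order_id, "eta": {"days": 5}}


# Hypothetical version table; the resource path is identical for both versions.
HANDLERS: dict[str, Callable[[str], dict]] = {"1": shipping_v1, "2": shipping_v2}
DEFAULT_VERSION = "1"


def dispatch(headers: Mapping[str, str], order_id: str) -> dict:
    # HTTP header names are case-insensitive, so normalise them first.
    normalised = {name.lower(): value for name, value in headers.items()}
    version = normalised.get("content-version", DEFAULT_VERSION)
    handler = HANDLERS.get(version)
    if handler is None:
        raise ValueError(f"unsupported version: {version}")
    return handler(order_id)
```

A client that sends no version header keeps receiving the old response shape, so existing callers are not broken when version 2 ships.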

However, you may come across several configuration issues when using header versioning. That is where semantic versioning proves to be more beneficial.

URI Versioning

A URI, or Uniform Resource Identifier, identifies an abstract or physical resource. It is a string of characters that serves as an identifier for a scheme name or file path.

The best thing is that you can directly add version information to the URL of the service. It proves to be a faster way of identifying specific versions.

Testers and developers can look at a URL and easily identify the version. However, managing and scaling the naming convention with URI versioning can be challenging. That is where header versioning is an ideal option.
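
A minimal sketch of URI versioning, assuming a `/v<number>/...` path convention (the convention and the example paths are assumptions, not something the approach mandates):

```python
import re

# Matches paths such as /v2/shipping/orders/42 and captures the version number.
_VERSIONED_PATH = re.compile(r"^/v(?P<version>\d+)(?P<rest>/.*)$")


def split_version(path: str) -> tuple[int, str]:
    """Return (version, remaining path) for a versioned request path."""
    match = _VERSIONED_PATH.match(path)
    if match is None:
        raise ValueError(f"no version prefix in path: {path}")
    return int(match.group("version")), match.group("rest")
```

For example, `split_version("/v2/shipping/orders/42")` yields version `2` and the path `/shipping/orders/42`, which a router can then hand to the matching implementation.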

Calendar Versioning

Calendar versioning is an approach in which you can leverage date formats for naming different versions. So, by viewing the version names, you can get an idea about the year of deployment.

For instance, under a YY.MINOR.PATCH scheme, a version released in 2020 that includes two error patches could be named 20.0.2.

Semantic Versioning

This versioning approach uses three non-negative integer values to indicate the type of version: major, minor, and patch. The three values make each version easy to interpret.

When a new version remains compatible with the previous one and only some internal logic of the service changes, you simply increment a number. That means you just bump the patch number whenever you add patches or fix code.

For instance, when you name a new version v1.0.1, it denotes slight changes to the previous version.
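
The numbering rules can be sketched in a few lines of Python. This is a simplified illustration that handles only plain `MAJOR.MINOR.PATCH` strings with an optional leading `v`; pre-release and build metadata are left out, and the `Version` class is a hypothetical helper.

```python
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "Version":
        # Accepts "1.0.1" as well as "v1.0.1".
        major, minor, patch = (int(part) for part in text.lstrip("v").split("."))
        return cls(major, minor, patch)

    def bump(self, part: str) -> "Version":
        # Bumping a higher-order number resets the lower-order ones to zero.
        if part == "major":
            return Version(self.major + 1, 0, 0)
        if part == "minor":
            return Version(self.major, self.minor + 1, 0)
        if part == "patch":
            return Version(self.major, self.minor, self.patch + 1)
        raise ValueError(f"unknown part: {part}")

    def is_compatible_with(self, other: "Version") -> bool:
        # Under semantic versioning, only a major bump signals a breaking change.
        return self.major == other.major

    def __str__(self) -> str:
        return f"v{self.major}.{self.minor}.{self.patch}"
```

Because `Version` is a tuple, versions compare in the expected order, so `Version.parse("v1.0.1") > Version.parse("v1.0.0")` holds.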

Use of parallelism with asynchronous communication

Poor communication between two or more services impacts the performance of microservices significantly. Microservices can communicate through one of two protocols.

The first protocol is synchronous communication. Wondering what it is? It is a blocking-type communication protocol in which microservices form a chain of requests.

Synchronous communication lags in performance and introduces a single point of failure. However, it is simpler to reason about and remains useful when a caller needs an immediate response.

The second protocol is asynchronous communication. It is a non-blocking protocol following an event-driven architecture. It facilitates parallel execution of user requests and offers better resilience.

When it comes to improving communication between microservices, asynchronous communication proves to be a better option. It lowers coupling during the user request execution.
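
To illustrate the non-blocking style, the sketch below issues three calls concurrently with Python's `asyncio`. The service names and delays are made up, and `asyncio.sleep` stands in for real network I/O.

```python
import asyncio


async def call_service(name: str, delay: float) -> str:
    """Stand-in for a non-blocking network call to another service."""
    await asyncio.sleep(delay)
    return f"{name}:ok"


async def handle_request() -> list[str]:
    # The three calls run concurrently; gather returns results in argument order.
    return await asyncio.gather(
        call_service("profile", 0.05),
        call_service("inventory", 0.05),
        call_service("payment", 0.05),
    )
```

Running `asyncio.run(handle_request())` waits roughly as long as the slowest call rather than the sum of all three, which is the benefit over a blocking chain of requests.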

Example: Real-time broadcast of Flywheel Sports with the use of better microservice communication

Flywheel Sports was about to launch a new platform, “FlyAnywhere.” The core aim of the company was to enhance the overall bike riding experience of the fitness community through real-time broadcasts.

The engineers at Flywheel made use of the microservice architecture and a modular approach to develop the platform.

However, certain communication challenges led to system availability issues and network failures. To address the issues, they prepared a checklist of features, including messaging, logging, queuing, service discovery, and load balancing.

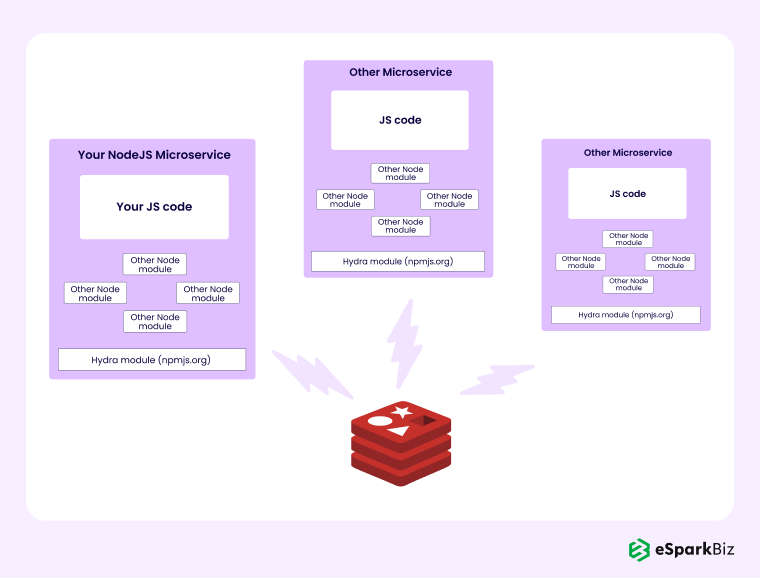

Therefore, they developed an internal library named “Hydra.” The library supports all the above features, along with Redis clusters. Each microservice was tied to a shared Redis cluster and used pub/sub to maintain inter-process communication.

Moreover, Hydra helped mitigate single-dependency issues. It offered features like service discovery, inter-service communication, load routing and balancing, job queues, and self-registration of services.

Asynchronous communication enabled Flywheel to establish a strong foundation to deliver real-time broadcasts to the consumers of FlyAnywhere.
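
The publish/subscribe style of messaging used in this example can be sketched with a tiny in-memory broker. This is not Hydra's API and does not use Redis; the `Broker` class and the channel name are hypothetical and only show the shape of the pattern.

```python
from collections import defaultdict
from typing import Callable


class Broker:
    """In-memory stand-in for a publish/subscribe channel server."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, channel: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[channel].append(handler)

    def publish(self, channel: str, message: dict) -> int:
        """Deliver the message to every subscriber; return how many received it."""
        handlers = self._subscribers.get(channel, [])
        for handler in handlers:
            handler(message)
        return len(handlers)
```

The publisher does not know who is listening, so services can be added or removed without changing the sender.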

Enhance productivity with Domain-driven design (DDD)

Organizations must design microservices around business capabilities with the use of DDD. The use of DDD can ensure high-level functionality coherence and offer loosely coupled services.

Every DDD model has two phases: strategic and tactical. The tactical phase enables the creation of a domain model with the use of different design patterns. The strategic phase, on the other hand, makes sure that the design architecture captures the business capabilities.

The prominent design patterns that facilitate designing loosely coupled microservices are aggregates, entities, and domain services.
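
A small sketch of these three patterns, using a hypothetical ordering domain (all names and the discount rule are assumptions for illustration):

```python
from dataclasses import dataclass, field


@dataclass
class OrderLine:
    """Entity: identified by its SKU within an order."""
    sku: str
    quantity: int
    unit_price_cents: int


@dataclass
class Order:
    """Aggregate root: every change to the lines goes through it."""
    order_id: str
    lines: list[OrderLine] = field(default_factory=list)

    def add_line(self, sku: str, quantity: int, unit_price_cents: int) -> None:
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        for line in self.lines:
            if line.sku == sku:
                line.quantity += quantity  # merge into the existing line
                return
        self.lines.append(OrderLine(sku, quantity, unit_price_cents))

    def total_cents(self) -> int:
        return sum(line.quantity * line.unit_price_cents for line in self.lines)


class DiscountService:
    """Domain service: logic that does not belong to a single entity."""

    def __init__(self, threshold_cents: int, percent: int) -> None:
        self.threshold_cents = threshold_cents
        self.percent = percent

    def price(self, order: Order) -> int:
        total = order.total_cents()
        if total >= self.threshold_cents:
            return total * (100 - self.percent) // 100
        return total
```

Keeping the invariants inside the aggregate means another service only needs the `Order` interface, not knowledge of how lines are stored.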

Example: SoundCloud reduces the release cycle with the use of DDD.

The service architecture of SoundCloud followed the Backends for Frontends (BFF) pattern. However, there were several concerns and complications relating to duplicate code. Moreover, they used the BFF pattern for both business and authorization logic, which was quite risky.

So, they decided to switch to the domain-driven design pattern and build a new approach called “Value Added Services (VAS)”.

This VAS has three tiers. The first tier is the Edge layer. It serves as an API gateway. The second tier is the value-added layer. It processes data from various resources and delivers rich user experiences. Finally, the foundation is the third layer, offering the building blocks for the domain.

SoundCloud leverages VAS as an aggregate within the DDD. VAS separates the concerns and offers a centralized orchestration. It has the potential to orchestrate calls and execute authorization for metadata synthesis.

By leveraging the VAS approach, SoundCloud was successful in shortening release cycles and enhancing team autonomy.

Break down the migration into several steps

If you are not proficient in handling the migration or are doing it for the very first time, you must know that it isn’t easy. When it comes to monolithic architectures, they involve a lot of repositories, monitoring, deployment, and various other complex tasks. So, migrating or changing all of these can be quite challenging and leave behind some gaps and errors.

Are you wondering how to handle such a situation? Well, all you have to do is retain the monolithic structure and build any additional capability as a microservice.

Once you have sufficient new services, you can determine ways to break down the old architecture into different relevant components and start migrating them one by one.
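
This incremental approach is often called the strangler fig pattern. A minimal routing sketch, assuming a path-based router sits in front of both systems (the prefixes and internal hostnames are hypothetical):

```python
def route(path: str, migrated_prefixes: dict[str, str], monolith_url: str) -> str:
    """Return the backend for a path: a microservice if migrated, else the monolith."""
    # Check longer prefixes first so /orders/returns wins over /orders.
    for prefix in sorted(migrated_prefixes, key=len, reverse=True):
        if path == prefix or path.startswith(prefix + "/"):
            return migrated_prefixes[prefix]
    return monolith_url


# Hypothetical migration state: only notifications has moved out so far.
MIGRATED = {"/notifications": "http://notifications.internal"}
MONOLITH = "http://monolith.internal"
```

Each time a component is extracted, you add one entry to the table; everything else keeps flowing to the monolith untouched.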

Facilitate service autonomy using independent microservices

One of the prominent microservice best practices is the use of independent microservices. Independent microservices are a way of taking service isolation a step higher.

You can obtain three forms of independence by leveraging independent microservices:

- Independent testing – It refers to the process of performing tests that prioritize service evolution. As a result, you can expect fewer test failures due to service dependencies.

- Independent service evolution – It is a process that isolates feature development based on evolutionary needs.

- Independent deployment – It lowers the chances of potential downtime due to service upgrades. If you have a cyclic dependency during the deployment of the app, it proves to be even more beneficial.

Example: The independent microservices’ management issues and single-purpose functions of Amazon

In 2001, the developers at Amazon realized that it was challenging to maintain a deployment pipeline with the use of a monolithic architecture. Therefore, they switched to a microservice architecture. However, the migration resulted in the emergence of several other issues.

The team members of Amazon decided to pull out single-purpose units and wrap them using a web interface. No doubt, it was an efficient solution. However, managing the many single-purpose functions was another issue.

Moreover, merging the services was not a solution, as it increased the complications even more. Therefore, Amazon’s development team built an automated deployment system named “Apollo.”

They also set a rule that all single-purpose functions need to communicate through a web interface API. They even established a set of decoupling rules that every function needed to adhere to.

Eventually, Amazon was successful in lowering manual handoffs and enhancing the efficiency of the system significantly.

Limit hardcoding values

One of the most popular microservice best practices is to avoid hardcoding values as much as possible. It ensures that network changes do not cause system-wide issues. To understand this better, consider an eCommerce application.

Whenever a user adds products to the shopping cart and makes the payment, customer service will get the delivery request. Traditionally, developers hardcode the address of the shipping service. So, in the event of any changes to the network configuration, it becomes tough to connect with the shipping service.

Therefore, to eliminate such hassles, it is best to make use of a network discovery mechanism with the use of a proxy or service registry. With the integration of the network discovery tools, it becomes easier to connect and execute different functions.
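
A service registry can be sketched as a lookup table that callers query instead of embedding an address. The class below is an in-memory illustration with hypothetical names and addresses; production systems typically rely on a dedicated discovery tool.

```python
class ServiceRegistry:
    """Maps a service name to its live addresses and hands them out round-robin."""

    def __init__(self) -> None:
        self._instances: dict[str, list[str]] = {}
        self._next: dict[str, int] = {}

    def register(self, name: str, address: str) -> None:
        self._instances.setdefault(name, []).append(address)

    def deregister(self, name: str, address: str) -> None:
        instances = self._instances.get(name, [])
        if address in instances:
            instances.remove(address)

    def resolve(self, name: str) -> str:
        instances = self._instances.get(name)
        if not instances:
            raise LookupError(f"no instances registered for {name}")
        index = self._next.get(name, 0) % len(instances)
        self._next[name] = index + 1
        return instances[index]
```

When the shipping service moves to a new host, it re-registers itself and callers pick up the new address on their next `resolve("shipping")` call, with no code change.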

Enhance native UI capabilities using a micro-frontend

Micro-frontend architecture refers to a process of breaking down a monolithic frontend into various smaller elements. It supports microservice architecture and enables upgrading individual UI elements. Using this approach will enable you to conveniently make changes to different components, test the components, and deploy them.

Moreover, leveraging micro frontend architecture makes it possible to develop native experiences. For instance, it allows the use of simple browser events for easy communication.

Micro-frontends can also enhance CI/CD pipelines and ensure faster feedback loops. They allow you to build a frontend that is agile and scalable.

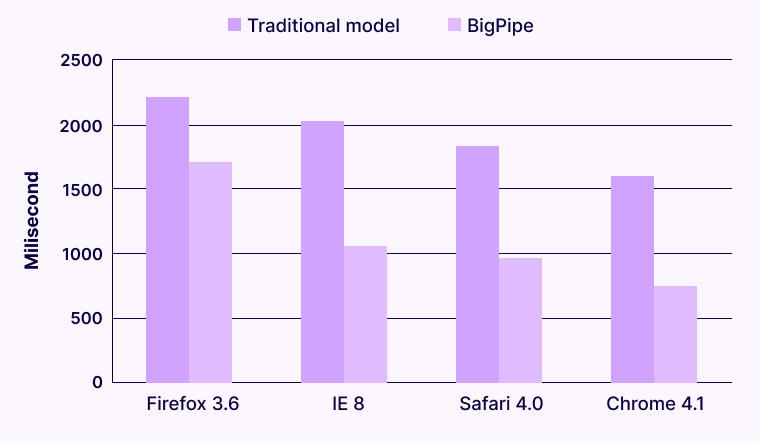

Example: Facebook improved web page latency using BigPipe.

In 2009, Facebook was having issues with the front end of its websites. Therefore, it was in search of an ideal solution to lower the loading times.

In the traditional front-end architecture, the server generated the whole page before the browser could start rendering it. Overlapping page generation with browser rendering was the way to lower the response time and latency.

That is why Facebook built BigPipe, a micro-frontend solution. It enabled Facebook to break down web pages into several smaller components known as “pagelets.”

With the use of BigPipe, Facebook was successful in improving the latency of web pages across browsers. The modern micro-frontend architecture has evolved a lot and now supports different use cases like mobile applications and web apps.

Separate microservice database to lower latency

Microservices are loosely coupled. However, with a shared database, they all need to retrieve data from the same datastore.

In such cases, the database needs to deal with several latency issues and data queries. A distributed database for microservices can be an ideal solution.

Having a separate microservice database enables the services to store data locally in a cache. It helps reduce latency significantly. As there is no single point of failure, resilience and security improve.

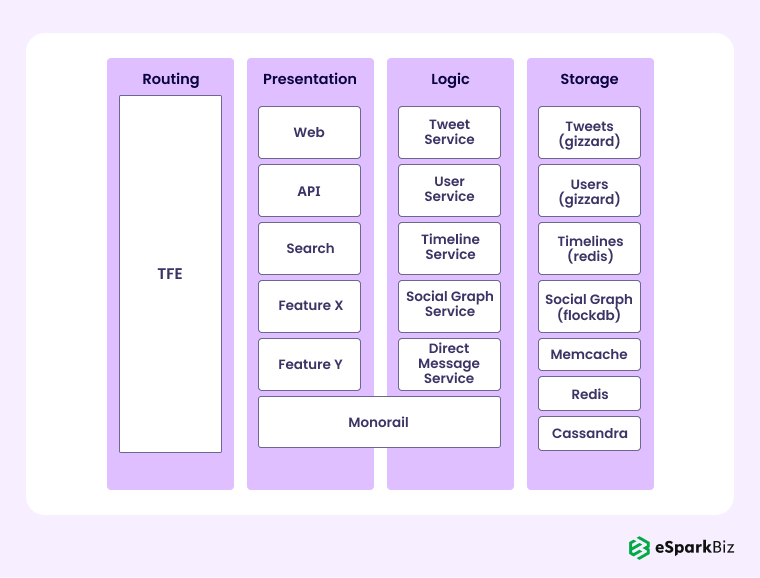

Example: Twitter improved its processing ability with a dedicated microservice datastore.

In 2012, Twitter made a switch from a monolithic software architecture to a microservices architecture. It leveraged several services, like Cassandra and Redis, to handle nearly 600 requests per second.

However, during the scaling of the platform, there was a need for a resilient and scalable database solution. The core aim was to ensure that it was capable of handling more queries in a second.

Twitter was initially developed on MySQL and migrated from a small database instance to a bigger one. It resulted in the creation of several large database clusters. As a result, moving data across instances became time-consuming.

To address this issue effectively, Twitter had to incorporate some changes. The first change was the introduction of a framework named Gizzard. It allowed the creation of distributed data stores. Moreover, it served as a middleware networking service and effectively handled failures.

In addition to that, Twitter introduced Cassandra as its data storage solution. The data queries were effectively handled by Cassandra and Gizzard. However, the latency issue continued. There was a need to process millions of queries in a second with a low latency rate.

Therefore, the tech team at Twitter had to develop “Manhattan,” an in-house distributed database. It could handle several queries in a second and improve latency at the same time. The distributed system enabled Twitter to improve its availability, latency, and reliability.

Moreover, Twitter migrated its data from MySQL to Manhattan and leveraged additional storage engines to serve various traffic patterns.

The use of Redis and Twemcache was another key aspect of the dedicated database solution at Twitter. These caches protected the backing data stores against heavy read traffic.

With the dedicated microservice database approach, Twitter was able to handle:

- Over 1000 databases

- Over 20 production clusters

- Tens of millions of queries in a second

- Tens of thousands of nodes

Pair the right technology with the relevant microservice

Often, team members do not give importance to the language or technology. However, neglecting this choice can give rise to several issues later. Therefore, leveraging the right technology is the need of the hour.

With so many technologies available, selecting the best one can be a little tough. To make your task easy and ensure the right selection, you can factor in aspects like scalability, maintainability, cost of architecture, ease of deployment, and fault tolerance.

Secure microservices for optimum data protection

Microservices communicate with external platforms and services through APIs. Therefore, it is vital to secure such communications.

Data compromise has become quite common in recent times. Moreover, malicious users can gain control over the services and affect the operations of the application. That is why taking appropriate measures is the need of the hour.

Data breaches can cause huge financial losses to your business. To avoid such a situation, microservices security is crucial. Some of the prominent ways to secure microservices include restricting data access, multi-factor authentication, vulnerability scanning, TLS/SSL encryption, penetration testing, and web application firewalls.

Example: OFX secured microservices using a middle-tier security tool.

OFX, an Australian international financial transfer institution, engages in the processing of over $22 billion worth of transactions every year. When OFX migrated to the cloud environment, it required a highly secure solution to ensure protection against cyber threats and boost visibility.

The external services and partners of OFX communicate with the microservices via APIs in an internal network. That is why there is a need to enhance visibility and improve security to verify the different access requests from external platforms.

To address this problem, OFX made use of a security tool to gain visibility of various aspects, like

- Blocking unusual and malicious traffic

- Monitoring login attempts

- Detection of different suspicious patterns

- Extensive penetration testing

Through the addition of a security tool, the cloud architects and security team at OFX were able to monitor and track API interactions. It played a pivotal role in securing microservices and detecting anomalies.

Enhance delivery speeds using a DevOps culture

DevOps is a set of practices that breaks down siloed development and operational capabilities. The core aim is to enhance interoperability. Embracing DevOps can provide organizations with several benefits.

One of the prominent benefits is that it offers a cohesive strategy for your organization and ensures efficient collaboration.

Example: DocuSign lowered errors and enhanced CI/CD efficiency using DevOps.

DocuSign launched the e-signing technology with the use of an Agile process for software development. However, it experienced failures owing to the lack of effective collaboration among the individual teams.

The business model of DocuSign, which involved signatures and contracts, required continuous integration. Moreover, the exchange of approvals and signatures must be error-free. It is crucial, as a single misattribution can result in serious consequences.

Therefore, DocuSign embraced the DevOps culture to enhance collaboration among the team members. However, despite the culture shift, the CI/CD problem still existed. DocuSign made use of application mocks to support continuous and effective integration.

The application mock provides a mock response and endpoint. DocuSign blends it with incident management and tests the different apps through simulations. It allowed the platform to build, release, and test applications in much less time via a cohesive strategy.
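
The idea of an application mock can be sketched with Python's standard `unittest.mock`. The client interface, endpoint path, and response shape below are hypothetical and are not DocuSign's API; they only show how a mock endpoint returns a canned response so the calling code can be tested in isolation.

```python
from unittest.mock import Mock


def send_envelope(signing_client, document_id: str) -> str:
    """Code under test: posts a document and returns the new envelope ID."""
    response = signing_client.post("/envelopes", json={"document_id": document_id})
    if response["status"] != 201:
        raise RuntimeError("envelope was not created")
    return response["body"]["envelope_id"]


def make_mock_client() -> Mock:
    """Mock endpoint that always answers with a fixed success response."""
    client = Mock()
    client.post.return_value = {"status": 201, "body": {"envelope_id": "env-123"}}
    return client
```

A test can then call `send_envelope(make_mock_client(), "doc-1")` and verify both the returned ID and the request that was sent, without the real downstream service being available.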

Simulations made it possible to test the behavior of the applications in real-life scenarios. Moreover, making quick changes and improving fault isolation became easier. So, DocuSign was able to continuously integrate changes and test apps in a hassle-free manner.

Containerize microservices to enhance process efficiency

Microservice containerization is indeed one of the most effective microservice best practices. Containers enable you to easily package the bare minimum of binaries, libraries, and program configurations. Therefore, they are lightweight and easily portable across different environments.

Also, containers share the kernel of the host operating system. As a result, they need fewer resources than running a separate operating system for each service.

Containerization can provide organizations with several benefits. These include:

- Higher consistency of data owing to the shared operating system

- Isolation of processes with minimal resources

- Quicker iterations and optimization of costs

- Smaller memory footprint

- There is no impact of any sudden change in the external environment.

Example: Spotify migrated 150 services to Kubernetes to process 10 million QPS.

Spotify has been engaging in the containerization of microservices since 2014. However, by the year 2017, they got to know that Helios, their in-house orchestration system, was not ideal for quick iteration.

To address the inefficiency of Helios, Spotify decided to migrate to Kubernetes. However, to avoid putting all their eggs in one basket, the engineers at Spotify initially migrated only some services to Kubernetes.

The tech professionals invested a lot of time in thoroughly understanding the key technical challenges they would come across. After a proper investigation, they leveraged the Kubernetes APIs and other extensibility features for effective software integration. In the year 2019, Spotify accelerated its migration to Kubernetes. Overall, they migrated more than 150 services, with the capability of handling 10 million requests in a second.

Leverage the backward compatibility of service endpoints

Microservice versioning plays a vital role in the effective management of different renditions of the same service. Moreover, it also improves backward compatibility. Besides that, there are several other ways to enhance backward compatibility.

Now you must be wondering why backward compatibility matters so much. It is essential because it ensures that the system doesn’t break when there is any change. That means you can easily make changes to the services without breaking the system.

One of the best ways to enhance backward compatibility is to agree on a contract for every service. However, if even one service breaks its contract, it is likely to raise issues for the entire system.

For instance, RESTful web APIs are vital for effective communication among distributed systems. An incompatible update to such an API may break the services that consume it.

To avoid such problems, it is best to make use of Postel’s Law or the robustness principle. It is an approach in which you need to be conservative about what you send but liberal about what you receive.

For different web apps, you can leverage this principle in the following manner:

- Every API endpoint should handle just one CRUD operation. Clients are responsible for aggregating multiple calls.

- Servers must send messages in the expected format and adhere to it.

- Receivers should ignore new or unknown fields in message bodies instead of treating them as errors.
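The robustness principle can be sketched in a few lines of Python. This is a minimal illustration, not a full API layer: the `OrderEvent` type, its fields, and the two helper functions are hypothetical names made up for the example. The parser accepts extra fields it does not know about, while the serializer emits only the documented ones.

```python
import json
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class OrderEvent:
    order_id: str
    amount_cents: int
    currency: str = "USD"  # optional field with a default


def parse_order_event(raw: str) -> OrderEvent:
    payload = json.loads(raw)
    known = {f.name for f in fields(OrderEvent)}
    # Liberal in what we accept: silently drop unrecognized fields.
    accepted = {key: value for key, value in payload.items() if key in known}
    return OrderEvent(**accepted)


def serialize_order_event(event: OrderEvent) -> str:
    # Conservative in what we send: only the documented fields.
    return json.dumps(
        {
            "order_id": event.order_id,
            "amount_cents": event.amount_cents,
            "currency": event.currency,
        },
        sort_keys=True,
    )


# A newer producer added "coupon"; this older consumer still works.
incoming = '{"order_id": "A-1", "amount_cents": 1999, "coupon": "SPRING"}'
event = parse_order_event(incoming)
```

A missing required field such as `order_id` still raises an error here, which is usually what you want: being liberal about additions does not mean accepting messages that violate the contract.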

Simplify parallel programming using immutable APIs

Immutability and microservices both lend themselves to parallelism, which, in the spirit of the Pareto Principle, helps organizations accomplish more in less time.

Wondering what immutability is? It is a concept in which objects or data cannot be modified once they are created. Because immutable data cannot change while other tasks are reading it, it is safe to share across parallel work, which makes parallel programming a natural fit for microservice architectures.

To gain a better understanding of how immutability improves latency and security, let’s take an example. Consider an eCommerce web app that requires the integration of third-party services and external payment gateways.

To integrate external services, you will require APIs for data exchange and microservice communication. Conventionally, APIs are mutable and offer the ability to create mutations as per the requirements.

However, mutable APIs are susceptible to cyberattacks. Malicious users may inject malicious code and gain access to the shell and to data.

The good news is that immutability using containerized microservices can help improve data integrity and security. It lets you replace faulty containers instead of trying to upgrade or fix them in place. Immutable APIs enable eCommerce platforms to secure the data of users in a hassle-free manner.

Easier parallel programming is one of the key benefits of leveraging immutable APIs. A major hazard in concurrent programs is that changes made by one thread can affect another. This results in several complications for programmers.

However, an immutable API can help fix this issue. Wondering how? It does so by limiting the side effects of one thread on the other.

If one thread needs to change a piece of data, it creates a new copy instead of modifying the shared one. This allows several threads to run in parallel without interfering with each other and improves the efficiency of programming significantly.
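To make the idea concrete, here is a minimal Python sketch using a frozen dataclass. The `Price` type and the `apply_discount` function are hypothetical and exist only for this example. Several worker threads read the same shared object, and each one produces a new object rather than changing the original.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Price:
    sku: str
    cents: int


def apply_discount(price: Price, percent: int) -> Price:
    # Returns a new object; the shared input is never modified.
    return replace(price, cents=price.cents * (100 - percent) // 100)


base = Price(sku="book-1", cents=2000)

# Every worker reads the same `base` object without any locking.
with ThreadPoolExecutor(max_workers=4) as pool:
    discounted = list(
        pool.map(lambda percent: apply_discount(base, percent), [0, 10, 25, 50])
    )
```

Because `Price` is frozen, an accidental assignment such as `base.cents = 1` raises an error instead of silently changing data that other threads depend on.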

Monitor the reliance on open-source tools

It is no surprise that developers make use of several open-source microservice tools for monitoring, security, logging, and debugging. However, a point to note here is that over-reliance on such tools can interfere with the safety and performance of the architecture. Therefore, it is crucial to limit their use.

Based on your unique development needs and the different types of tools you are leveraging, be sure to implement organizational policies relating to their usage. For instance, you can establish governance for exception processing or invest time to gain a better idea of the open-source software supply chain.

Use a centralized monitoring and logging system

When you open the Netflix page, you can easily find many functionalities on the home page. Right from profile management to one-click play options and a series of recommendations, you can find everything. This is possible because the Netflix team executes all these functions across different microservices.

Monitoring and logging systems enable organizations to handle several microservices in a distributed architecture. However, having a centralized system can prove to be even more beneficial for your business. It allows you to handle errors and track changes better.

As a result, it helps improve observability. In addition, centralized monitoring and logging allow team members to carry out root cause analysis effectively.
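A small Python sketch can show what centralized logging looks like in practice. It is only an illustration under stated assumptions: the in-memory `central_sink` stands in for a real central log store, and the service names and the `correlation_id` field are hypothetical. Each service writes structured JSON lines to the same place, so a single request can be traced across services.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    def format(self, record: logging.LogRecord) -> str:
        # One JSON object per line, easy for a central store to index.
        return json.dumps(
            {
                "level": record.levelname,
                "service": record.name,  # logger name doubles as service name
                "correlation_id": getattr(record, "correlation_id", None),
                "message": record.getMessage(),
            }
        )


def build_logger(service: str, stream) -> logging.Logger:
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(service)
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    logger.propagate = False  # keep output in the shared sink only
    return logger


central_sink = io.StringIO()  # stand-in for a central log store
orders = build_logger("orders", central_sink)
payments = build_logger("payments", central_sink)

orders.info("order created", extra={"correlation_id": "req-42"})
payments.info("payment authorized", extra={"correlation_id": "req-42"})
payments.info("payment authorized", extra={"correlation_id": "req-43"})

# Root cause analysis: follow one request across services.
entries = [json.loads(line) for line in central_sink.getvalue().splitlines()]
trace = [e["service"] for e in entries if e["correlation_id"] == "req-42"]
```

Here `trace` lists the services that handled request `req-42` in order, which is the kind of cross-service view that is hard to get when every service keeps its own separate log.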

Conclusion

The demand for microservice architecture is on the rise for developing scalable applications. It helps speed up the app development process. Moreover, it makes fault and error identification easier, thereby allowing you to launch more reliable applications in the target market.

By now, you must have a comprehensive idea about microservice best practices. It is time to leverage them for your organization and see the results. From separating microservice databases to containerizing microservices and simplifying parallel programming, the practices are many. Implementing these best practices can help lower your efforts, save your valuable time, and maximize gains.

Are you in search of the best microservice solution? Be sure to hire reputed and experienced experts in the industry for the best outcomes.